Building scalable software is one of the central challenges in modern software development. As a business grows, its applications must handle more data, more users, and more complexity. Scalable software can absorb that growth without compromising performance, reliability, or user experience. Whether you are working on a startup project or a large enterprise application, scalability is a core aspect of software design. In this article, we will explore the key strategies and techniques for building scalable software, from architecture decisions to monitoring systems.

What Is Scalable Software?

Scalable software refers to an application or system that can handle an increased load without compromising performance, efficiency, or reliability. Scalability matters most for systems that anticipate growth in user traffic, data volume, or feature demands: as the system grows, it should keep meeting user expectations instead of slowing down or suffering downtime.

Scalability is typically divided into two categories:

- Vertical Scalability (Scaling Up) – This involves adding more resources (e.g., CPU, RAM, storage) to a single server to improve its performance.

- Horizontal Scalability (Scaling Out) – This involves adding more servers or instances to distribute the load across multiple systems, ensuring that no single machine is overwhelmed.

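The right approach depends on factors like anticipated traffic, budget, and system architecture. Vertical scaling is simpler, but it is eventually limited by the largest machine you can buy or rent; horizontal scaling can keep growing, but the application must be designed to run across many instances. To build scalable software, developers need to consider scalability at every stage, from design through deployment, testing, and maintenance.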
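To make the horizontal option concrete, a rough capacity estimate divides expected peak traffic by what one instance can safely handle. The sketch below is a minimal back-of-the-envelope calculation, not a sizing tool: the function name, the per-instance throughput figure, and the 75% target utilization (spare headroom for spikes) are all assumptions for illustration.

```python
import math


def instances_needed(peak_rps: float, per_instance_rps: float,
                     target_utilization: float = 0.75) -> int:
    """Estimate how many identical instances are needed to serve peak_rps.

    Assumes every instance can sustain per_instance_rps (measured, for example,
    with a load test) and should run at no more than target_utilization of that
    capacity, leaving headroom for spikes. The 0.75 default is an assumption.
    """
    if per_instance_rps <= 0:
        raise ValueError("per_instance_rps must be positive")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    usable_rps = per_instance_rps * target_utilization
    # Round up: a fraction of an instance still requires a whole instance.
    return max(1, math.ceil(peak_rps / usable_rps))


if __name__ == "__main__":
    # Hypothetical numbers: 4,200 requests/s at peak, 500 requests/s per instance.
    print(instances_needed(4200, 500))
```

With those hypothetical numbers the estimate comes out to 12 instances. Scaling vertically instead would mean finding a single machine that can sustain roughly 5,600 requests/s (4,200 / 0.75) on its own.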

Key Strategies for Building Scalable Software

1. Design for Modular Architecture

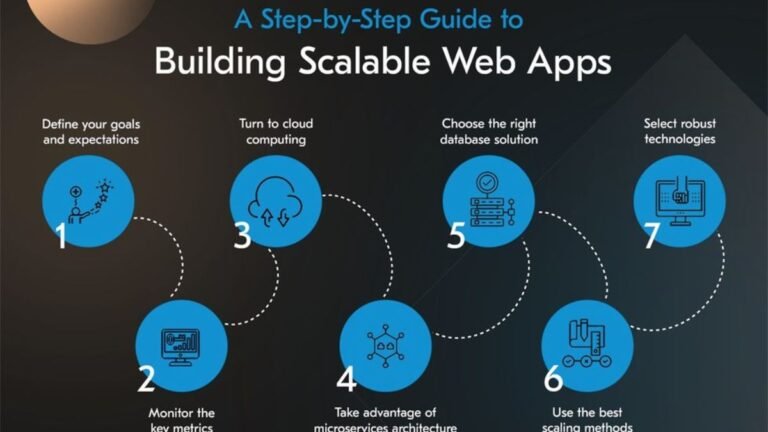

The first step in building scalable software is to design it with a modular architecture. A modular architecture divides the system into smaller, independent components or services that can operate autonomously. A common way to apply this idea is a microservices architecture, which lets developers focus on individual parts of the system and scale each component independently based on demand.

- Microservices vs. Monolithic Architecture:

  - Monolithic Architecture builds the entire application as a single unified unit. Because its parts are tightly coupled, it can quickly become difficult to scale: even if only one part needs additional resources, the whole application has to be deployed and scaled together.

  - Microservices Architecture, on the other hand, breaks the system into smaller services, each handling a specific task. Because each service runs on its own, it can be scaled independently according to its own demand, which makes scaling more efficient.

By designing your software in a modular way, you allow each service to grow and evolve independently. This increases flexibility and efficiency, both for scaling and for future updates.
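To see why independent scaling matters, here is a deliberately simplified model: each component has its own peak demand, a microservice deployment sizes each service separately, and a monolith must run enough full copies to satisfy its busiest component. The service names, traffic figures, and per-replica capacities are hypothetical, and the model ignores the extra per-service overhead that microservices bring.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Component:
    name: str
    peak_rps: float         # demand on this component at peak
    per_replica_rps: float  # what one replica of this component can serve


def replicas_for(component: Component) -> int:
    # Round up so capacity always covers the peak; keep at least one replica.
    return max(1, math.ceil(component.peak_rps / component.per_replica_rps))


def microservice_plan(components: List[Component]) -> Dict[str, int]:
    """Each service is deployed and scaled on its own demand."""
    return {c.name: replicas_for(c) for c in components}


def monolith_plan(components: List[Component]) -> Dict[str, int]:
    """Every monolith replica contains every component, so the busiest
    component sets the replica count for all of them."""
    replicas = max(replicas_for(c) for c in components)
    return {c.name: replicas for c in components}


if __name__ == "__main__":
    # Hypothetical services and traffic figures, for illustration only.
    components = [
        Component("catalog", peak_rps=900, per_replica_rps=100),
        Component("checkout", peak_rps=50, per_replica_rps=100),
        Component("accounts", peak_rps=120, per_replica_rps=100),
    ]
    micro = microservice_plan(components)
    mono = monolith_plan(components)
    print("microservices:", micro, "total", sum(micro.values()))
    print("monolith:", mono, "total", sum(mono.values()))
```

In this toy case the catalog needs 9 replicas, so the monolith also runs 9 copies of checkout and accounts (27 component instances), while the microservice plan needs 12.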

2. Use Load Balancing for Traffic Distribution

Load balancing is a technique used to distribute incoming network traffic across multiple servers. It prevents any single server from becoming a bottleneck, improving both the performance and reliability of the software. A load balancer sits between the users and the servers and uses a balancing algorithm to decide which server receives each request.

There are several ways to implement load balancing:

- Round-Robin Load Balancing: Sends requests to each server in turn, so every server receives the same number of requests. This works well when the servers and the workloads are similar.

- Weighted Load Balancing: Assigns each server a weight that reflects its capacity, so more powerful servers receive a proportionally larger share of the traffic.

- Least Connections Load Balancing: Sends traffic to the server with the fewest active connections, helping to avoid overloading servers.
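The three strategies can be sketched as small in-process selectors. This is an illustrative sketch, not how any particular load balancer is implemented: the class names and server names are hypothetical, there are no health checks, and the classes are not thread-safe. For the weighted case it uses one common variant, "smooth" weighted round robin, which picks servers in proportion to their weights while interleaving the picks instead of sending bursts to the heaviest server.

```python
import itertools
from typing import Dict, List


class RoundRobin:
    """Hands out servers in a fixed rotating order."""

    def __init__(self, servers: List[str]):
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class SmoothWeightedRoundRobin:
    """Picks servers in proportion to their weights, spreading picks out."""

    def __init__(self, weights: Dict[str, int]):
        self._weights = dict(weights)
        self._current = {server: 0 for server in weights}
        self._total = sum(weights.values())

    def pick(self) -> str:
        # Every server earns credit equal to its weight; the server with the
        # most credit wins and pays back the total, so heavier servers win
        # proportionally more often.
        for server, weight in self._weights.items():
            self._current[server] += weight
        chosen = max(self._current, key=self._current.get)
        self._current[chosen] -= self._total
        return chosen


class LeastConnections:
    """Sends each new connection to the server with the fewest active ones."""

    def __init__(self, servers: List[str]):
        self._active = {server: 0 for server in servers}

    def acquire(self) -> str:
        chosen = min(self._active, key=self._active.get)
        self._active[chosen] += 1
        return chosen

    def release(self, server: str) -> None:
        # Call when the request or connection on this server finishes.
        self._active[server] -= 1


if __name__ == "__main__":
    weighted = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
    print([weighted.pick() for _ in range(7)])
```

With weights 5, 1, and 1, seven consecutive picks send five requests to the large server and one to each small server. In production, this logic usually lives in a dedicated load balancer rather than in application code.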

By using load balancing, software can scale horizontally: capacity grows by adding servers behind the load balancer, and user demand is spread across them instead of landing on a single machine.

3. Leverage Cloud Infrastructure for Elasticity

The cloud has revolutionized the way businesses scale their software applications. Cloud platforms like AWS, Google Cloud, and Microsoft Azure provide on-demand computing resources that can be provisioned and de-provisioned as needed. This concept, known as elasticity, allows businesses to scale up or down quickly in response to traffic changes, providing both cost-efficiency and flexibility.

Cloud infrastructure offers several benefits:

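- Auto-scaling: Cloud platforms can automatically add or remove resources based on load, so your software keeps enough capacity for traffic spikes without paying for idle servers the rest of the time. New instances take time to start, so very sudden spikes may still call for some spare headroom.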

- High Availability: Cloud services often include features like load balancing and fault tolerance, ensuring that your application remains available even if individual servers or data centers experience issues.

- Global Reach: Cloud providers have data centers all over the world, allowing you to deploy your application close to your users, reducing latency and improving performance.
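Auto-scalers typically compare an observed metric with a target and resize proportionally. The Kubernetes Horizontal Pod Autoscaler, for example, documents a rule of the form `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that proportional rule with a tolerance band and min/max bounds; the function name, the 0.1 tolerance, and the 1 to 20 replica bounds are assumptions for illustration, and real autoscalers add further safeguards such as stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 20, tolerance: float = 0.1) -> int:
    """Proportional scaling decision, e.g. on average CPU utilization.

    The tolerance and bounds are assumed values, not any platform's defaults.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current size to avoid flapping.
        desired = current_replicas
    else:
        # Scale the replica count by how far the metric is from the target.
        desired = math.ceil(current_replicas * ratio)
    # Never go below the floor or above the budget ceiling.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

In the example, four replicas running at 90% CPU against a 60% target would grow to six, while a reading of 63% falls within the tolerance band and leaves the count unchanged.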

By adopting cloud infrastructure, you gain the flexibility to scale your software efficiently without the need to invest in physical hardware or deal with complex server management.

4. Implement Caching Strategies

Caching is one of the most effective ways to optimize the performance of scalable software. It involves storing frequently accessed data in memory so that it can be quickly retrieved when needed, without having to query the database or perform expensive computations every time. Caching reduces load on backend systems and speeds up response times, which is especially crucial for applications with high user traffic.

There are two main types of caching:

- Client-Side Caching: Involves storing data on the client’s device (browser or application) for future use. This is effective for static resources like images, stylesheets, or JavaScript files.

- Server-Side Caching: Involves storing data on the server side (often in an in-memory data store like Redis or Memcached) to improve the performance of dynamic content generation.
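A common server-side pattern is cache-aside: check the cache first, and only on a miss load from the database and store the result with an expiry time. The in-process sketch below shows that flow. The class and the `load_product` function are hypothetical; unlike Redis or Memcached, this cache is local to one process, has no size limit or eviction, and is not thread-safe.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Cache-aside helper: serve from memory if fresh, otherwise load and store."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        # key -> (expires_at, value)
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                 # hit: no backend call
        value = loader(key)                 # miss or expired: go to the source
        self._entries[key] = (now + self.ttl_seconds, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        # Call after updating the source of truth so readers don't see stale data.
        self._entries.pop(key, None)


if __name__ == "__main__":
    def load_product(product_id):
        # Hypothetical stand-in for a slow database query.
        time.sleep(0.1)
        return {"id": product_id, "name": f"Product {product_id}"}

    cache = TTLCache(ttl_seconds=30)
    cache.get_or_load(42, load_product)   # miss: pays the ~100 ms query
    cache.get_or_load(42, load_product)   # hit: served from memory
```

The TTL bounds how stale a cached value can get, and `invalidate` lets writes clear an entry immediately. Choosing the TTL is a trade-off between freshness and backend load.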

By strategically implementing caching mechanisms, you can significantly reduce response times and relieve pressure on bottlenecks such as the database.

5. Optimize Database Performance

Databases are often a bottleneck in scalable software systems. As data grows, queries can become slower, and maintaining consistency across distributed systems becomes more challenging. Therefore, it is critical to optimize database performance to handle larger datasets and higher loads.

Here are a few techniques to optimize database performance:

- Sharding: Involves splitting the database into smaller, more manageable pieces (called shards), each of which is hosted on a separate server. This improves performance and allows for horizontal scaling.

- Database Replication: Involves copying data from one database server to another, creating redundant copies that can handle read traffic. This increases availability and scalability, especially for read-heavy applications.

- Indexing: By indexing frequently queried fields, you can speed up search operations and reduce the load on the database.
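Sharding and replication usually meet in a routing layer: a key such as a user ID selects the shard, writes go to that shard's primary, and reads can be spread across its replicas. The sketch below is a minimal router under those assumptions; the class names and host names are hypothetical, and it uses simple hash-modulo placement.

```python
import hashlib
import itertools
from dataclasses import dataclass, field
from typing import List


@dataclass
class Shard:
    primary: str                                       # accepts writes
    replicas: List[str] = field(default_factory=list)  # serve reads


class ShardRouter:
    """Routes a key to one shard: writes to its primary, reads to its replicas."""

    def __init__(self, shards: List[Shard]):
        if not shards:
            raise ValueError("need at least one shard")
        self._shards = shards
        # One rotating iterator per shard; fall back to the primary if no replicas.
        self._read_cycles = [itertools.cycle(s.replicas or [s.primary])
                             for s in shards]

    def shard_index(self, key: str) -> int:
        # Use a stable hash: Python's built-in hash() of a str is randomized
        # per process, so it would route the same key differently after a restart.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self._shards)

    def for_write(self, key: str) -> str:
        return self._shards[self.shard_index(key)].primary

    def for_read(self, key: str) -> str:
        return next(self._read_cycles[self.shard_index(key)])


if __name__ == "__main__":
    router = ShardRouter([
        Shard("db-0-primary", ["db-0-replica-a", "db-0-replica-b"]),
        Shard("db-1-primary", ["db-1-replica-a"]),
    ])
    print(router.for_write("user:42"), router.for_read("user:42"))
```

Two caveats apply to this design. Hash-modulo placement moves most keys when the number of shards changes, which is why schemes like consistent hashing are often preferred. Replicas can also lag behind the primary, so reads that must see a just-completed write may need to go to the primary.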

Additionally, using NoSQL databases (such as MongoDB or Cassandra) for certain use cases can offer better scalability for large datasets, while traditional relational databases (like PostgreSQL or MySQL) are suitable for applications requiring strong consistency.

6. Prioritize Asynchronous Processing

Asynchronous processing allows tasks to be executed in the background, freeing up resources and improving the responsiveness of your software. Instead of waiting for tasks like sending emails, processing data, or calling external APIs to complete, your software can continue to serve other requests while these tasks run in parallel.

Common techniques for implementing asynchronous processing include:

- Message Queues: Systems like RabbitMQ or Kafka can queue up tasks that need to be processed later. Workers can then pull tasks from the queue and handle them asynchronously.

- Event-Driven Architectures: This involves triggering actions in response to specific events, enabling your software to respond dynamically to real-time changes in user behavior or external systems.
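The queue-and-worker pattern can be sketched with Python's `asyncio.Queue` as an in-process stand-in for a broker like RabbitMQ or Kafka. The function names and job names are hypothetical. Unlike a real broker, this queue lives in memory, so jobs are lost if the process stops, but the shape is the same: producers enqueue and move on, and a pool of workers drains the queue.

```python
import asyncio
from typing import List, Tuple


async def worker(name: str, queue: asyncio.Queue,
                 done: List[Tuple[str, str]]) -> None:
    while True:
        job = await queue.get()
        try:
            await asyncio.sleep(0.01)   # stand-in for slow work, e.g. sending an email
            done.append((name, job))
        finally:
            queue.task_done()           # lets queue.join() know this job is finished


async def run(jobs: List[str], num_workers: int = 3) -> List[Tuple[str, str]]:
    queue: asyncio.Queue = asyncio.Queue()
    done: List[Tuple[str, str]] = []
    workers = [asyncio.create_task(worker(f"worker-{i}", queue, done))
               for i in range(num_workers)]
    for job in jobs:
        # A request handler would enqueue like this and respond immediately,
        # instead of waiting for the job to finish.
        queue.put_nowait(job)
    await queue.join()                  # wait until every job has been processed
    for w in workers:
        w.cancel()                      # workers loop forever; stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return done


if __name__ == "__main__":
    results = asyncio.run(run([f"welcome-email-{n}" for n in range(10)]))
    print(len(results), "jobs processed")
```

Adding workers (or, with a real broker, worker processes on other machines) increases throughput without changing the code that enqueues jobs.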

Asynchronous processing helps to decouple tasks, improves performance, and makes your software more responsive, which is essential for scaling efficiently.

7. Monitor and Optimize Performance Continuously

Even after implementing scalability strategies, it’s essential to monitor the performance of your software continuously. Tools like Prometheus, Grafana, and New Relic can help you track system performance, identify bottlenecks, and make data-driven decisions to optimize resource allocation.

Regular performance testing (e.g., load testing and stress testing) can help uncover potential scalability issues before they become critical. Additionally, profiling tools can provide insights into specific areas of your code that need optimization.
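Load tests are usually judged by latency percentiles (p50, p95, p99) rather than averages, because a small fraction of slow requests can hide behind a good mean. The sketch below fires concurrent calls at a function and summarizes the latencies with `statistics.quantiles`. The function names are hypothetical, and it only illustrates the measurement idea; dedicated load-testing tools are the usual choice for real tests against a deployed service.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def summarize(latencies: List[float]) -> Dict[str, float]:
    """Return p50/p95/p99 and max of a list of latency samples."""
    if len(latencies) < 2:
        raise ValueError("need at least two samples")
    # 99 cut points; "inclusive" keeps the percentiles within the observed range.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98],
            "max": max(latencies)}


def _timed(fn: Callable[[], object]) -> float:
    start = time.perf_counter()
    fn()
    return time.perf_counter() - start


def load_test(fn: Callable[[], object], total_requests: int = 200,
              concurrency: int = 10) -> Dict[str, float]:
    """Call fn total_requests times with up to `concurrency` calls in flight."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda _: _timed(fn), range(total_requests)))
    return summarize(latencies)


if __name__ == "__main__":
    # Hypothetical handler that takes about 5 ms per call.
    print(load_test(lambda: time.sleep(0.005)))
```

Running the same test while gradually raising `concurrency` shows where latency percentiles start to climb, which is often the first sign of a scalability limit.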

Optimization is an ongoing process. By continuously measuring performance and applying adjustments, you can ensure that your software remains scalable and efficient over time.

Conclusion

Building scalable software requires careful planning, the right architectural decisions, and the use of appropriate technologies. From designing modular architectures to optimizing database performance and leveraging cloud resources, the strategies outlined above provide a solid foundation for ensuring your application can handle future growth. However, scalability is not a one-time effort; it’s an ongoing process that demands continuous monitoring, testing, and optimization.

By following these key strategies and techniques, you will be better equipped to build software that can grow with your business, serve a large user base, and deliver a seamless experience as demand increases. Scalability is not just about handling growth; it’s about providing your users with a high-performance, reliable application that can adapt to changing needs.